Single View Trigger Generator

The Single View Trigger Generator has 3 fundamental parts:

- The consumption of pr-update messages

- The strategy execution

- The production of

sv-triggermessages orpcrecords

For each part we will need to configure a set of environment variables and config maps.

Environment variables

The following table indicates all the available environment variables for you to customize the service to your needs.

When creating the service from the marketplace the following environment variables will be added for you with some default values but you still need to properly update them to make the service work

| Name | Required | Description | Default value |

|---|---|---|---|

| LOG_LEVEL | ✓ | Level to use for logging; to choose from: error, fatal, warn, info, debug, trace, silent | silent |

| MONGODB_URL | ✓ | MongoDB URL where the projections are stored | - |

| MONGODB_NAME | ✓ | MongoDB Database name where the projections are stored | - |

| EVENT_STORE_CONFIG_PATH | ✓ | Path to the Event Store Config file | - |

| EVENT_STORE_TARGET | ✓ | Kafka topic to send the sv-trigger messages or MongoDB collection to save the pc records | - |

| SINGLE_VIEW_NAME | ✓ | The name of the Single View | - |

| KAFKA_PROJECTION_UPDATES_FOLDER | ✓ | Path to the Kafka Projection Updates folder | - |

| ER_SCHEMA_FOLDER | ✓ | Path to the ER Schema folder | - |

| PROJECTION_CHANGES_SCHEMA_FOLDER | ✓ | Path to the Projection Changes Schema folder | - |

| MANUAL_STRATEGIES_FOLDER | - | Absolute path of custom strategy functions folder. These functions are used in __fromFile__ annotations inside the Kafka Projection Updates Configuration | - |

| TRIGGER_CUSTOM_FUNCTIONS_FOLDER | - | Absolute path of custom functions folder. These functions are used in __fromFile__ annotations inside the Projection Changes Schema Configuration. | - |

| CONTROL_PLANE_CONFIG_PATH | - | Starting from v3.3.0, is possible to configure Runtime Management. More details on the dedicated section | - |

| CONTROL_PLANE_BINDINGS_PATH | - | Starting from v3.3.0, is possible to configure Runtime Management. More details on the dedicated section | - |

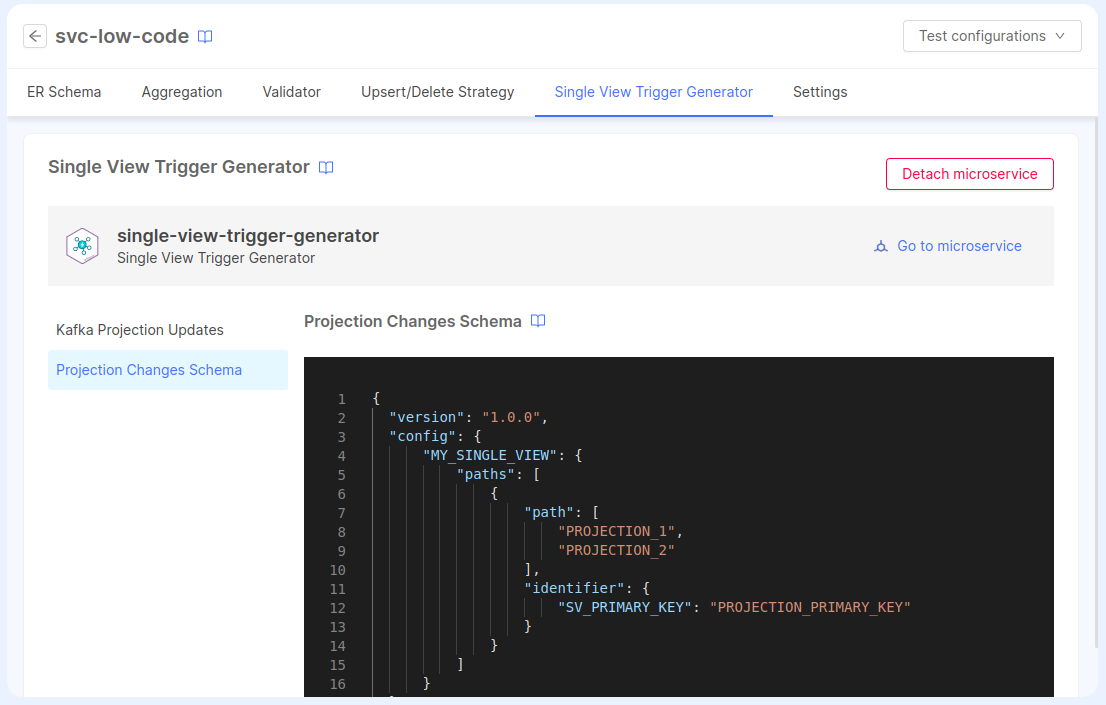

Attaching a Service to a Single View

To simplify the configuration of the Single View Trigger Generator service, you can attach a previously created Single View Trigger Generator service from the marketplace to a Single View. Here's how to do it:

- create your Single View and attach a Single View Creator Service to it from the dedicated configuration page. This is necessary because the Single View Trigger Generator can work with only one Single View Creator Service.

- within the Single View Creator tab of the Single View modal, enter the configuration page of the Single View Creator that you've configured.

- in the Single View Trigger Generator tab, you can choose a Single View Trigger Generator from the available services.

Once the service has been attached, you can manage the content of the Projection Changes Schema config map and the Kafka Projection Updates config map.

Any updates to these configurations will be reflected in the service config maps after saving the configuration.

Additionally, when a service is attached to a Single View, the ER Schema config map will be shared between the Single View Creator and the Single View Trigger Generator.

Also, the environment variable SINGLE_VIEW_NAME will be set automatically to the name of the Single View.

When a Single View Trigger Generator is attached to a Single View, the environment variable SINGLE_VIEW_NAME will be set to "read-only" mode, as well as the config maps for the ER Schema, Projection Changes Schema, and Kafka Projection Updates.

If you prefer to manually configure these services, you can always detach the service in the Single View Trigger Generator tab via the Detach microservice button on the top-right side of the page. After saving the configuration, the environment variable and the config maps will be again editable from the Microservices section.

Config Maps

The service can use the following 3 config maps:

When creating the service from the marketplace the following config maps will be created for you with some default values. The Event Store Config must be manually modified from the Microservice page to include all the missing configuration, but the ER Schema, the Projection Changes Schema and the Kafka Projection Updates configuration can be simplified by attaching the service to a Single View: in this case, the environment variables and config maps for these three config maps will be automatically managed by the application.

ER Schema

The ER Schema config map contains the erSchema.json file which describes the relationships between each projection of the System of Record.

Remember to copy/paste the mount path into the ER_SCHEMA_FOLDER environment variable so the service can read the file.

To know more on how to configure the file please go to the ER Schema page.

Projection Changes Schema

The Projection Changes Schema config map contains the projectionChangesSchema.json file which defines how to get to the base projection of the single view starting from the projection in which we received the ingestion message.

Remember to copy/paste the mount path into the PROJECTION_CHANGES_SCHEMA_FOLDER environment variable so the service can read the file.

If you need more info on how to configure the projectionChangesSchema.json file, please refer to the Projection Changes Schema page.

Kafka Projection Updates

The Kafka Projection Updates config map contains the kafkaProjectionUpdates.json file which defines the topics from where to consume the Projection Updates and the strategy to apply to each message.

Remember to copy/paste the mount path into the KAFKA_PROJECTION_UPDATES_FOLDER environment variable so the service can read the file.

If you need more info on how to configure the kafkaProjectionUpdates.json file, please refer to the Kafka Projection Updates page.

If you attach the service to a Single View, the Kafka Projection Updates config map will be read-only.

If you need to use manual strategies for one or more projections, you have to create an additional config map

having the manual strategies, that will be linked to the service by the usage of the MANUAL_STRATEGIES_FOLDER environment variable.

Event Store Config

The Event Store Config is a JSON file containing the configuration of the consumer and producer of the service itself and it has the following format:

{

"consumer": {

"kafka": {

// Kafka consumer configuration (see below)

}

},

"producer": {

"<kafka | mongo>": {

// Kafka or mongo producer configuration (see below)

}

}

}

Mind that only one producer and consumer must be configured at a time so the service knows which kind to use. Providing more than one consumer or producer will fail the configmap validation and shut down the service at start up.

The JSON configuration must be compliant to the following JSON Schema.

- Schema Viewer

- Raw JSON Schema

- Example

{

"type": "object",

"title": "Event Store Config",

"examples": [

{

"consumer": {

"kafka": {

"brokers": "localhost:9092,localhost:9093",

"clientId": "client-id",

"consumerGroupId": "group-id",

"consumeFromBeginning": true,

"logLevel": "NOTHING"

}

},

"producer": {

"mongo": {

"url": "mongodb://localhost:27017",

"dbName": "pc-sv-books-test",

"maxBatchSize": 1000

}

}

}

],

"properties": {

"consumer": {

"title": "Consumer Configuration",

"type": "object",

"properties": {

"kafka": {

"title": "Kafka Consumer Configuration",

"allOf": [

{

"type": "object",

"title": "Kafka Connection Configuration",

"properties": {

"brokers": {

"anyOf": [

{

"type": "string"

},

{

"type": "object",

"title": "Secret from Env",

"properties": {

"encoding": {

"type": "string",

"enum": [

"base64"

]

},

"key": {

"type": "string"

},

"type": {

"type": "string",

"const": "env"

}

},

"required": [

"key",

"type"

],

"additionalProperties": true

},

{

"type": "object",

"title": "Secret from File",

"properties": {

"encoding": {

"type": "string",

"enum": [

"base64"

]

},

"key": {

"type": "string"

},

"path": {

"type": "string"

},

"type": {

"type": "string",

"const": "file"

}

},

"required": [

"path",

"type"

],

"additionalProperties": true

}

],

"description": "Kafka brokers"

},

"ssl": {

"title": "SSL Configuration",

"anyOf": [

{

"type": "boolean"

},

{

"type": "object",

"properties": {

"ca": {

"type": "string"

},

"key": {

"type": "string"

},

"passphrase": {

"anyOf": [

{

"type": "string"

},

{

"type": "object",

"title": "Secret from Env",

"properties": {

"encoding": {

"type": "string",

"enum": [

"base64"

]

},

"key": {

"type": "string"

},

"type": {

"type": "string",

"const": "env"

}

},

"required": [

"key",

"type"

],

"additionalProperties": true

},

{

"type": "object",

"title": "Secret from File",

"properties": {

"encoding": {

"type": "string",

"enum": [

"base64"

]

},

"key": {

"type": "string"

},

"path": {

"type": "string"

},

"type": {

"type": "string",

"const": "file"

}

},

"required": [

"path",

"type"

],

"additionalProperties": true

}

]

},

"cert": {

"type": "string"

}

},

"additionalProperties": true

}

],

"description": "Options for ssl certificates"

},

"sasl": {

"title": "SASL Configuration",

"type": "object",

"properties": {

"mechanism": {

"type": "string",

"enum": [

"plain",

"scram-sha-256",

"scram-sha-512"

]

},

"username": {

"anyOf": [

{

"type": "string"

},

{

"type": "object",

"title": "Secret from Env",

"properties": {

"encoding": {

"type": "string",

"enum": [

"base64"

]

},

"key": {

"type": "string"

},

"type": {

"type": "string",

"const": "env"

}

},

"required": [

"key",

"type"

],

"additionalProperties": true

},

{

"type": "object",

"title": "Secret from File",

"properties": {

"encoding": {

"type": "string",

"enum": [

"base64"

]

},

"key": {

"type": "string"

},

"path": {

"type": "string"

},

"type": {

"type": "string",

"const": "file"

}

},

"required": [

"path",

"type"

],

"additionalProperties": true

}

]

},

"password": {

"anyOf": [

{

"type": "string"

},

{

"type": "object",

"title": "Secret from Env",

"properties": {

"encoding": {

"type": "string",

"enum": [

"base64"

]

},

"key": {

"type": "string"

},

"type": {

"type": "string",

"const": "env"

}

},

"required": [

"key",

"type"

],

"additionalProperties": true

},

{

"type": "object",

"title": "Secret from File",

"properties": {

"encoding": {

"type": "string",

"enum": [

"base64"

]

},

"key": {

"type": "string"

},

"path": {

"type": "string"

},

"type": {

"type": "string",

"const": "file"

}

},

"required": [

"path",

"type"

],

"additionalProperties": true

}

]

}

},

"additionalProperties": true,

"description": "Authentication options"

},

"clientId": {

"type": "string",

"description": "Identifier of the application"

},

"connectionTimeout": {

"type": "number",

"description": "Time in milliseconds to wait for a successful connection"

},

"authenticationTimeout": {

"type": "number",

"description": "Timeout in ms for authentication requests"

},

"reauthenticationThreshold": {

"type": "number",

"description": "When periodic reauthentication is configured on the broker side, reauthenticate when reauthenticationThreshold milliseconds remain of session lifetime"

},

"requestTimeout": {

"type": "number",

"description": "Time in milliseconds to wait for a successful request"

},

"enforceRequestTimeout": {

"type": "boolean"

},

"retry": {

"title": "Retry mechanism Configuration",

"type": "object",

"properties": {

"maxRetryTime": {

"type": "number"

},

"initialRetryTime": {

"type": "number"

},

"factor": {

"type": "number"

},

"multiplier": {

"type": "number"

},

"retries": {

"type": "number"

}

},

"additionalProperties": true,

"description": "Retry message options for when message consumption fails, for more info https://kafka.js.org/docs/configuration#default-retry"

},

"logLevel": {

"type": "string",

"enum": [

"NOTHING",

"ERROR",

"WARN",

"INFO",

"DEBUG"

],

"description": "Logging level for kafka related logs"

}

},

"required": [

"brokers"

],

"additionalProperties": true

},

{

"title": "Consumer Configuration",

"type": "object",

"properties": {

"consumerGroupId": {

"type": "string",

"description": "Consumer group ID"

},

"consumeFromBeginning": {

"type": "boolean",

"default": false,

"description": "This determines whether the consumer will start consuming from offset 0 or it will wait for the next message that comes. This applies only when consumer group does not already have an offset on the topic"

}

},

"required": [

"consumerGroupId"

],

"additionalProperties": true

}

]

}

},

"required": [

"kafka"

],

"additionalProperties": true,

"description": "Only one key is allowed in this object as it will be used to determine the consumer's medium"

},

"producer": {

"title": "Producer Configuration",

"anyOf": [

{

"type": "object",

"title": "Kafka Producer Configuration",

"properties": {

"kafka": {

"allOf": [

{

"type": "object",

"title": "Kafka Connection Configuration",

"properties": {

"brokers": {

"anyOf": [

{

"type": "string"

},

{

"type": "object",

"title": "Secret from Env",

"properties": {

"encoding": {

"type": "string",

"enum": [

"base64"

]

},

"key": {

"type": "string"

},

"type": {

"type": "string",

"const": "env"

}

},

"required": [

"key",

"type"

],

"additionalProperties": true

},

{

"type": "object",

"title": "Secret from File",

"properties": {

"encoding": {

"type": "string",

"enum": [

"base64"

]

},

"key": {

"type": "string"

},

"path": {

"type": "string"

},

"type": {

"type": "string",

"const": "file"

}

},

"required": [

"path",

"type"

],

"additionalProperties": true

}

],

"description": "Kafka brokers"

},

"ssl": {

"title": "SSL Configuration",

"anyOf": [

{

"type": "boolean"

},

{

"type": "object",

"properties": {

"ca": {

"type": "string"

},

"key": {

"type": "string"

},

"passphrase": {

"anyOf": [

{

"type": "string"

},

{

"type": "object",

"title": "Secret from Env",

"properties": {

"encoding": {

"type": "string",

"enum": [

"base64"

]

},

"key": {

"type": "string"

},

"type": {

"type": "string",

"const": "env"

}

},

"required": [

"key",

"type"

],

"additionalProperties": true

},

{

"type": "object",

"title": "Secret from File",

"properties": {

"encoding": {

"type": "string",

"enum": [

"base64"

]

},

"key": {

"type": "string"

},

"path": {

"type": "string"

},

"type": {

"type": "string",

"const": "file"

}

},

"required": [

"path",

"type"

],

"additionalProperties": true

}

]

},

"cert": {

"type": "string"

}

},

"additionalProperties": true

}

],

"description": "Options for ssl certificates"

},

"sasl": {

"title": "SASL Configuration",

"type": "object",

"properties": {

"mechanism": {

"type": "string",

"enum": [

"plain",

"scram-sha-256",

"scram-sha-512"

]

},

"username": {

"anyOf": [

{

"type": "string",

"title": "Secret from string"

},

{

"type": "object",

"title": "Secret from Env",

"properties": {

"encoding": {

"type": "string",

"enum": [

"base64"

]

},

"key": {

"type": "string"

},

"type": {

"type": "string",

"const": "env"

}

},

"required": [

"key",

"type"

],

"additionalProperties": true

},

{

"type": "object",

"properties": {

"encoding": {

"type": "string",

"enum": [

"base64"

]

},

"key": {

"type": "string"

},

"path": {

"type": "string"

},

"type": {

"type": "string",

"const": "file"

}

},

"required": [

"path",

"type"

],

"additionalProperties": true

}

]

},

"password": {

"anyOf": [

{

"type": "string"

},

{

"type": "object",

"title": "Secret from Env",

"properties": {

"encoding": {

"type": "string",

"enum": [

"base64"

]

},

"key": {

"type": "string"

},

"type": {

"type": "string",

"const": "env"

}

},

"required": [

"key",

"type"

],

"additionalProperties": true

},

{

"type": "object",

"title": "Secret from File",

"properties": {

"encoding": {

"type": "string",

"enum": [

"base64"

]

},

"key": {

"type": "string"

},

"path": {

"type": "string"

},

"type": {

"type": "string",

"const": "file"

}

},

"required": [

"path",

"type"

],

"additionalProperties": true

}

]

}

},

"additionalProperties": true,

"description": "Authentication options"

},

"clientId": {

"type": "string",

"description": "Identifier of the application"

},

"connectionTimeout": {

"type": "number",

"description": "Time in milliseconds to wait for a successful connection"

},

"authenticationTimeout": {

"type": "number",

"description": "Timeout in ms for authentication requests"

},

"reauthenticationThreshold": {

"type": "number",

"description": "When periodic reauthentication is configured on the broker side, reauthenticate when reauthenticationThreshold milliseconds remain of session lifetime"

},

"requestTimeout": {

"type": "number",

"description": "Time in milliseconds to wait for a successful request"

},

"enforceRequestTimeout": {

"type": "boolean"

},

"retry": {

"type": "object",

"title": "Retry Mechanism",

"properties": {

"maxRetryTime": {

"type": "number"

},

"initialRetryTime": {

"type": "number"

},

"factor": {

"type": "number"

},

"multiplier": {

"type": "number"

},

"retries": {

"type": "number"

}

},

"additionalProperties": true,

"description": "Retry message options for when message consumption fails, for more info https://kafka.js.org/docs/configuration#default-retry"

},

"logLevel": {

"type": "string",

"enum": [

"NOTHING",

"ERROR",

"WARN",

"INFO",

"DEBUG"

],

"description": "Logging level for kafka related logs"

}

},

"required": [

"brokers"

],

"additionalProperties": true

},

{

"type": "object",

"title": "Compression",

"properties": {

"compressionName": {

"type": "string",

"enum": [

"snappy",

"gzip",

"none"

],

"default": "none"

}

},

"additionalProperties": true

},

{

"type": "object",

"title": "Additional Configurations",

"properties": {

"maxBatchSize": {

"type": "number",

"description": "Max number of messages sent in each batch of the producer",

"default": 500

}

},

"additionalProperties": true

}

]

}

},

"required": [

"kafka"

],

"additionalProperties": false

},

{

"type": "object",

"title": "MongoDB Configuration",

"properties": {

"mongo": {

"allOf": [

{

"type": "object",

"title": "MongoDB Connection Configuration",

"properties": {

"url": {

"anyOf": [

{

"type": "string"

},

{

"type": "object",

"title": "Secret from Env",

"properties": {

"encoding": {

"type": "string",

"enum": [

"base64"

]

},

"key": {

"type": "string"

},

"type": {

"type": "string",

"const": "env"

}

},

"required": [

"key",

"type"

],

"additionalProperties": true

},

{

"type": "object",

"title": "Secret from File",

"properties": {

"encoding": {

"type": "string",

"enum": [

"base64"

]

},

"key": {

"type": "string"

},

"path": {

"type": "string"

},

"type": {

"type": "string",

"const": "file"

}

},

"required": [

"path",

"type"

],

"additionalProperties": true

}

],

"description": "Databse MongoDB URL in `mongodb://` format"

},

"dbName": {

"type": "string",

"description": "Databse name"

}

},

"required": [

"url",

"dbName"

],

"additionalProperties": true

},

{

"type": "object",

"title": "Additional Configurations",

"properties": {

"maxBatchSize": {

"type": "number",

"description": "Max number of messages sent in each batch of the producer",

"default": 500

}

},

"additionalProperties": true

}

]

}

},

"required": [

"mongo"

],

"additionalProperties": false

}

],

"description": "Only one key is allowed in this object as it will be used to determine the producer's medium"

}

},

"required": [

"consumer",

"producer"

],

"additionalProperties": true

}

{

"consumer": {

"kafka": {

"brokers": "localhost:9092,localhost:9093",

"clientId": "client-id",

"consumerGroupId": "group-id",

"consumeFromBeginning": true,

"logLevel": "NOTHING"

}

},

"producer": {

"mongo": {

"url": "mongodb://localhost:27017",

"dbName": "pc-sv-books-test",

"maxBatchSize": 1000

}

}

}

Secrets Resolution

Starting from v3.3.1, the following fields can contain secrets that can be managed using the Fast Data Secrets Resolution mechanism:

consumer.kafka.['bootstrap.servers']consumer.kafka.['sasl.username']consumer.kafka.['sasl.password']consumer.kafka.['ssl.passphrase']consumer.kafka.['ssl.keystore.certificate.chain']producer.kafka.['bootstrap.servers']producer.kafka.['sasl.username']producer.kafka.['sasl.password']producer.kafka.['ssl.passphrase']producer.kafka.['ssl.key.password']producer.mongo.['url']

Consumers

At the moment you can only configure your consumer with kafka which will read pr-update messages from the Real-Time Updater. To configure it you must follow the JsonSchema specification above.

Producers

For the producers you can choose between two options: Kafka or MongoDB (sv-trigger vs. pc).

With MongoDB you will save Projection Changes on the DB just like the Real-Time Updater does. With Kafka instead it will send sv-trigger messages which will also be read by the Single View Creator by changing its configuration to do so.

To configure it you must follow the JsonSchema specification above.

Starting from version v3.1.6 of the SVTG, is possible to add into the Kafka producer configuration the property compressionName, to apply a particular encoding to sv-trigger messages. Allowed values are:

gzipsnappynone(default, if no options are provided)

While this option can be useful for messages having a large size, can increase the processing time due to the computational resources needed to apply the compression.

Examples

Below you can find a list of example configurations, based on the two different types of producers.

Kafka Consumer with MongoDB Producer

{

"consumer": {

"kafka": {

"brokers": "localhost:9092,localhost:9093",

"clientId": "client-id",

"consumerGroupId": "group-id",

"consumeFromBeginning": true,

"logLevel": "NOTHING"

}

},

"producer": {

"mongo": {

"url": "mongodb://localhost:27017",

"dbName": "pc-sv-books-test"

}

}

}

Kafka Consumer with Kafka Producer

{

"consumer": {

"kafka": {

"brokers": "localhost:9092,localhost:9093",

"clientId": "client-id",

"consumerGroupId": "group-id",

"consumeFromBeginning": true,

"logLevel": "NOTHING"

}

},

"producer": {

"kafka": {

"brokers": "localhost:9092,localhost:9093",

"clientId": "client-id",

"logLevel": "NOTHING"

}

}

}

Kafka Consumer with Kafka Producer having Snappy compression

{

"consumer": {

"kafka": {

"brokers": "localhost:9092,localhost:9093",

"clientId": "client-id",

"consumerGroupId": "group-id",

"consumeFromBeginning": true,

"logLevel": "NOTHING"

}

},

"producer": {

"kafka": {

"brokers": "localhost:9092,localhost:9093",

"clientId": "client-id",

"logLevel": "NOTHING",

"compressionName": "snappy"

}

}

}

Kafka with Authentication

{

"consumer": {

"kafka": {

"brokers": "localhost:9092,localhost:9093",

"clientId": "client-id",

"consumerGroupId": "group-id",

"consumeFromBeginning": true,

"sasl": {

"username": "my-username",

"password": "my-password",

"mechanism": "plain", // 'scram-sha-256', 'scram-sha-512'

},

"ssl": true,

"logLevel": "NOTHING"

}

},

"producer": {

"kafka": {

"brokers": "localhost:9092,localhost:9093",

"clientId": "client-id",

"logLevel": "NOTHING",

"ssl": true,

"sasl": {

"username": "my-username",

"password": "my-password",

"mechanism": "plain", // 'scram-sha-256', 'scram-sha-512'

}

}

}

}

Runtime Management

This feature is supported from version 3.3.0 of the Single View Trigger Generator.

By specifying the environment variables CONTROL_PLANE_CONFIG_PATH, you enable the SVTG to receive and execute the commands from the Runtime Management.

By design, every service interacting with the Control Plane starts up in a paused state, unless the Control Plane has already resumed the data stream before.

Therefore, when the SVTG starts up, the trigger generation will not start automatically.

In this case, you just need to send a resume command to one of the projections managed by the SVTG.

You can read about the setup of the Single View Trigger Generator in its dedicated section.