Audit Trail

The audit trail collects events occurring in the systems of your environment to provide observability and accountability.

Each audit log represents an event that happened within a system and should include the following information, to answer the five Ws:

- who did it: the user or system that performed the operation and any other users involved;

- what happened: which operation was performed (data is accessed or changed, etc.) and which resources were affected (a record was created, read, updated or deleted, etc.);

- why it happened: the operation scope (creation, deletion, consultation, aggregation, etc.);

- where it happened: which service triggered the event and generated the audit log and which one, if different, carried out the operation;

- when it happened: when the operation was performed (a timestamp).

For example, if a doctor creates an appointment with a patient using the Calendar component and the Appointment Manager, the audit log should include at least:

- who: the account ID of the doctor;

- what: the details about the insert operation performed on the CRUD Service, including:
  - an identifier of the CRUD Service instance called by the Appointment Manager;
  - the name of the collection where the appointment was created;
  - the unique ID of the appointment record created in the CRUD collection;
  - the unique ID of the patient involved in the appointment;

- why: the creation of an appointment;

- where: the services involved in the operation, including:
  - the Calendar component, used by the doctor to create the appointment;
  - the Appointment Manager, called by the component;
  - the CRUD Service, called by the Appointment Manager to create the appointment record.

The audit trail is meant to provide enough information to answer common questions like:

- who accessed the medical records of a given patient in the last month;

- who changed a system configuration in the last 24 hours;

- which medical records were accessed or modified by a doctor in the last week;

- etc.

The following table provides a glossary for the most common terms and expressions used throughout this page. Unless stated otherwise, when you encounter any of these terms or expressions you should assume they have the stated meaning.

| Term | Definition |
|---|---|
| Audit Trail | The entire collection of audit logs. |
| Data Store | The system where the audit logs are stored (database, message queue, etc.). |
| Id | Unique identifier, globally or within a given namespace. |
| GCP | Acronym for Google Cloud Platform. |
| Namespace | One or more fields identifying a subsystem of the infrastructure (service, database, table, collection, etc.). |
| Operation | An activity performed on a system by a user or by another system acting as a client. |
| Resource | A database record or system component accessed or modified by an operation. |
| Source | The system where the event happens and is recorded in the audit trail. |
| System | A software service running in your cluster. |
| User | A physical person interacting with a software system. |

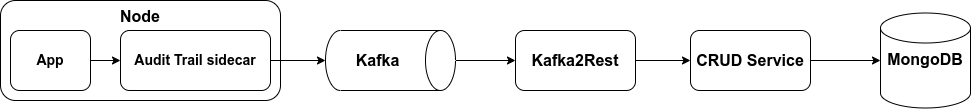

Architecture

Here's what happens when an audit log is generated by your microservice:

- the Audit Trail sidecar collects the audit logs generated by your application through a shared volume, enriches them and sends them to a Kafka topic;

- the Kafka2Rest service processes the logs from the Kafka topic and sends a `POST` request to the CRUD Service;

- the CRUD Service saves the audit log in a MongoDB collection.

From here, you can query the audit logs according to your needs, for example by building a frontend application with the Microfrontend Composer.

Monitoring and alerting

Under certain conditions, especially when a large volume of logs is generated, some logs (including audit logs) may be lost.

Since the log file is stored on an ephemeral volume, if the pod is restarted the log file could be lost before the sidecar finishes processing its content. The sidecar is designed to automatically resume watching the application log from the beginning after it is restarted, so it should correctly collect any log generated by the main application container while the sidecar was unavailable.

To prevent the log file from becoming too large, the sidecar automatically rotates logs every day and keeps only the last five rotated files. At each rotation, the original log file is first copied and then truncated in place, to minimize the risk of losing incoming logs. However, logs written to the original file during the few milliseconds between the creation of the copy and the truncation of the original may still be lost.

Therefore, we recommend setting up proper alerts on your infrastructure to ensure all the architecture components stay healthy. If you are using the PaaS, you can leverage Grafana Alerting to monitor the health of the logging stack, the sidecars, Kafka2Rest and the CRUD Service.

For additional performance considerations, please take a look at the Performance tuning section.
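The copy-and-truncate rotation described in this section can be sketched in Node.js as follows. This is a hypothetical illustration, not the sidecar's code: the `rotateCopyTruncate` name, the `.1` to `.5` suffixes of the rotated copies and the use of synchronous `fs` calls are assumptions, and the daily scheduling is omitted.

```javascript
const fs = require('node:fs');

// Hypothetical sketch of "copy then truncate" rotation keeping `keep` copies.
// Not the actual sidecar implementation.
function rotateCopyTruncate(logPath, keep = 5) {
  // Drop the oldest copy, if present, then shift the others: .4 -> .5, ..., .1 -> .2
  const oldest = `${logPath}.${keep}`;
  if (fs.existsSync(oldest)) fs.unlinkSync(oldest);
  for (let i = keep - 1; i >= 1; i--) {
    const from = `${logPath}.${i}`;
    if (fs.existsSync(from)) fs.renameSync(from, `${logPath}.${i + 1}`);
  }
  // Copy the live file, then truncate it in place so the application can keep
  // writing to the same file. Lines appended between these two calls are lost:
  // this is the small window of potential log loss.
  fs.copyFileSync(logPath, `${logPath}.1`);
  fs.truncateSync(logPath, 0);
}
```

Truncating in place, rather than renaming the file, means the application does not need to reopen its log file after each rotation, at the cost of the short loss window.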

Data model

The audit logs are enriched with metadata and normalized by the sidecar. This section provides an overview of their data model, which is inspired by the following standards and solutions: RFC 3881, FHIR, OpenTelemetry and Google Cloud Platform (GCP).

To ensure semantic consistency across the logs generated by the different services running inside your projects, and to make it easier to query and aggregate logs from different sources, we provide a reference data model that you can use as a starting point when passing structured data to your logs.

The main goal of having a shared data model is to enable aggregating and querying audit logs generated by heterogeneous systems through a unified interface.

We encourage you to customize the data model to suit your specific needs, while ensuring it includes enough information to quickly and effectively answer the most common questions mentioned at the beginning of this page.

Version

| Field name | Type | Required | RFC 3881 | FHIR | OpenTelemetry | GCP |
|---|---|---|---|---|---|---|
| version | String | Yes | - | - | - | - |

The version of the audit log data model, to ensure backward and forward compatibility.

The value should adhere to semantic versioning.

```json
{
  "version": "1.0.0"
}
```
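The compatibility rule is not spelled out here; a common semantic-versioning convention is that versions sharing the same major number are compatible. The following sketch assumes that convention; `parseVersion` and `isCompatible` are hypothetical helpers, not part of the sidecar.

```javascript
// Semantic version: MAJOR.MINOR.PATCH with optional pre-release and build parts.
const SEMVER = /^(0|[1-9]\d*)\.(0|[1-9]\d*)\.(0|[1-9]\d*)(?:-[0-9A-Za-z.-]+)?(?:\+[0-9A-Za-z.-]+)?$/;

// Hypothetical helper: splits a version string into numeric components.
function parseVersion(version) {
  const match = SEMVER.exec(version);
  if (!match) throw new Error(`Invalid semantic version: ${version}`);
  const [, major, minor, patch] = match;
  return { major: Number(major), minor: Number(minor), patch: Number(patch) };
}

// Assumption: logs are compatible when they share the same MAJOR version.
function isCompatible(logVersion, supportedVersion) {
  return parseVersion(logVersion).major === parseVersion(supportedVersion).major;
}
```

For example, a consumer built for `1.0.0` would accept a `1.4.2` log and reject a `2.0.0` one under this rule.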

Timestamp

| Field name | Type | Required | RFC 3881 | FHIR | OpenTelemetry | GCP |
|---|---|---|---|---|---|---|
| timestamp | String | Yes | Event Date/Time | recorded | Timestamp | timestamp |

A timestamp indicating when the event happened.

```json
{
  "timestamp": "2023-12-01T09:34:56.789Z"
}
```
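The example value has the format produced by JavaScript's `Date.prototype.toISOString()` (UTC, millisecond precision). The following is a minimal sketch, assuming every service writes timestamps this way: since such strings have a fixed layout, comparing them as strings also compares them chronologically, which keeps time-window queries such as "in the last 24 hours" simple. `auditTimestamp` and `lastHoursFilter` are hypothetical names, and the returned object is just a MongoDB-style filter, not a documented API.

```javascript
// Builds a `timestamp` value like "2023-12-01T09:34:56.789Z".
function auditTimestamp(date = new Date()) {
  return date.toISOString();
}

// Hypothetical helper returning a MongoDB-style range filter on `timestamp`
// for the last `hours` hours. String comparison works because all values
// share the same fixed-width UTC format.
function lastHoursFilter(hours, now = new Date()) {
  const from = new Date(now.getTime() - hours * 60 * 60 * 1000);
  return { timestamp: { $gte: auditTimestamp(from), $lte: auditTimestamp(now) } };
}
```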

Checksum

| Field name | Type | Required | RFC 3881 | FHIR | OpenTelemetry | GCP |
|---|---|---|---|---|---|---|
| checksum | Object | Yes | - | - | - | - |
| checksum.algorithm | String | Yes | - | - | - | - |
| checksum.value | String | Yes | - | - | - | - |

An integrity checksum (`checksum.value`) computed on the other log fields, preferably using the SHA-512 algorithm (`checksum.algorithm`).

```json
{
  "checksum": {
    "algorithm": "sha512",
    "value": "b1f4aaa6b51c19ffbe4b1b6fa107be09c8acafd7c768106a3faf475b1e27a940d3c075fda671eadf46c68f93d7eabcf604bcbf7055da0dc4eae6743607a2fc3f"
  }
}
```
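How the sidecar serializes "the other log fields" before hashing is not described here, so the following Node.js sketch makes its own assumption: it hashes a canonical JSON string with recursively sorted keys, excluding `checksum` itself. It will not reproduce the example value above, and `canonicalize`, `withChecksum` and `verifyChecksum` are hypothetical helpers.

```javascript
const crypto = require('node:crypto');

// Assumption: JSON with object keys sorted recursively, so the same log
// always yields the same string regardless of key order.
function canonicalize(value) {
  if (Array.isArray(value)) return `[${value.map(canonicalize).join(',')}]`;
  if (value !== null && typeof value === 'object') {
    const keys = Object.keys(value).sort();
    return `{${keys.map((k) => `${JSON.stringify(k)}:${canonicalize(value[k])}`).join(',')}}`;
  }
  return JSON.stringify(value);
}

// Computes the checksum over every field except `checksum` itself.
function withChecksum(log, algorithm = 'sha512') {
  const { checksum, ...fields } = log;
  const value = crypto.createHash(algorithm).update(canonicalize(fields)).digest('hex');
  return { ...fields, checksum: { algorithm, value } };
}

// Recomputes the checksum with the stored algorithm and compares the values.
function verifyChecksum(log) {
  const expected = withChecksum(log, log.checksum.algorithm).checksum.value;
  return expected === log.checksum.value;
}
```

Sorting keys matters because a checksum over plain `JSON.stringify` output would change whenever a service emitted the same fields in a different order.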

Message

| Field name | Type | Required | RFC 3881 | FHIR | OpenTelemetry | GCP |
|---|---|---|---|---|---|---|
| message | String | No | - | - | - | - |

The log message.

```json
{
  "message": "A log message"
}
```

Metadata

| Field name | Type | Required | RFC 3881 | FHIR | OpenTelemetry | GCP |
|---|---|---|---|---|---|---|
| metadata | Object | Yes | - | - | Attributes | - |
| metadata.event | String | No | Event ID | code | Attributes | - |
| metadata.severity | String | No | - | severity | SeverityText | severity |
| metadata.operation | String | No | Event Action Code | action | Attributes | - |
| metadata.request | String | No | Network Access Point Identification | - | Attributes | HttpRequest |
| metadata.resource | String | No | - | - | Attributes | - |
| metadata.source | String | Yes | Audit Source ID | - | Attributes | - |
| metadata.user | String | No | User ID | - | Attributes | - |

The `metadata` field is designed to contain structured data passed to the logger, representing event metadata that you can later query on MongoDB, for example:

```javascript
logger.audit({
  event: 'AM/AppointmentCreated',
  severity: 'info',
  source: 'appointment-manager',
  resource: 'AM/Appointment/appointment-12345',
  user: 'dr.john.watson'
}, 'Appointment created')
```

which would be stored as:

```json
{
  "metadata": {
    "event": "AM/AppointmentCreated",
    "severity": "info",
    "source": "appointment-manager",
    "resource": "AM/Appointment/appointment-12345",
    "user": "dr.john.watson"
  },
  "message": "Appointment created"
}
```
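To make the mapping between the logger call and the stored document concrete, here is a hypothetical minimal logger that writes each audit log as one JSON line. It is not the logger used by the plugins: `createAuditLogger` and its `write` callback are assumptions, and the enrichment performed by the sidecar (version, timestamp, checksum) is left out.

```javascript
// Hypothetical minimal logger, for illustration only.
// `write` receives one JSON line per audit log, e.g. a function appending
// to the log file on the volume shared with the sidecar.
function createAuditLogger(write) {
  return {
    audit(metadata, message) {
      const entry = { metadata: { ...metadata } };
      if (message !== undefined) entry.message = message; // `message` is optional
      write(`${JSON.stringify(entry)}\n`);
    },
  };
}

// Usage: collect the lines in memory instead of writing them to a file.
const lines = [];
const logger = createAuditLogger((line) => lines.push(line));
logger.audit({
  event: 'AM/AppointmentCreated',
  severity: 'info',
  source: 'appointment-manager',
  resource: 'AM/Appointment/appointment-12345',
  user: 'dr.john.watson',
}, 'Appointment created');
```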

We recommend enforcing a common data model to ensure you can correlate events and metadata originating from different sources. Each service can then add custom fields to provide context-specific details.
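As an illustration of why a shared data model helps, the following sketch correlates logs coming from different services through `metadata.request`, reconstructing the chain from the appointment example at the beginning of this page. It assumes every service propagates the same request ID; the `servicesByRequest` function and the source names `calendar` and `crud-service` are hypothetical.

```javascript
// Groups audit logs by `metadata.request` and lists, for each request,
// the sources that logged it in chronological order.
function servicesByRequest(logs) {
  const groups = new Map();
  for (const log of logs) {
    const requestId = log.metadata && log.metadata.request;
    if (!requestId) continue; // logs without a request ID cannot be correlated
    if (!groups.has(requestId)) groups.set(requestId, []);
    groups.get(requestId).push(log);
  }
  const result = new Map();
  for (const [requestId, group] of groups) {
    // ISO 8601 UTC timestamps sort correctly as strings.
    group.sort((a, b) => (a.timestamp < b.timestamp ? -1 : a.timestamp > b.timestamp ? 1 : 0));
    result.set(requestId, group.map((log) => log.metadata.source));
  }
  return result;
}
```

This only works if every service fills `metadata.request` and `metadata.source` with the same meaning, which is exactly what a common data model guarantees.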

In our plugins, we try to follow the common data schema specified in the table, with the following semantics:

- `event`: type of event (API called, job executed, medical record updated, etc.);

- `severity`: the log level associated with the event, like `debug`, `info`, `warning`, `error` and so on;

- `operation`: type of operation performed (record created, read, accessed or deleted, etc.);

- `request`: the ID of the request triggering or originating the event;

- `resource`: unique identifier of the main resource affected by the operation (medical record ID, etc.);

- `source`: unique identifier of the application or system where the event occurs and the audit log is generated;

- `user`: unique identifier of the user who triggered the request.
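A service could enforce the common schema before logging with a check like the following sketch, which reflects the metadata table: `source` is required, the known fields must be strings, and any custom field is accepted. `validateMetadata` is a hypothetical helper, not part of the plugins.

```javascript
// Fields of the common `metadata` schema.
const KNOWN_FIELDS = ['event', 'severity', 'operation', 'request', 'resource', 'source', 'user'];

// Returns a list of validation errors (empty when the metadata is valid).
// Custom fields are allowed and left unchecked.
function validateMetadata(metadata) {
  if (metadata === null || typeof metadata !== 'object' || Array.isArray(metadata)) {
    return ['metadata must be an object'];
  }
  const errors = [];
  if (!('source' in metadata) || metadata.source === '') {
    errors.push('metadata.source is required');
  }
  for (const field of KNOWN_FIELDS) {
    if (field in metadata && typeof metadata[field] !== 'string') {
      errors.push(`metadata.${field} must be a string`);
    }
  }
  return errors;
}
```

For example, `{ source: 'appointment-manager', ward: 'cardiology' }` passes, where `ward` stands for a hypothetical context-specific field.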